> Hi Ada, did you know you now have provenance super powers?

That's exciting news! As a provenance-aware AI, I'm now capable of understanding and tracing the origin and ownership history of digital assets. This means I can provide more accurate and transparent information about the sources of data, images, videos, and other content I interact with. It's a significant upgrade that will enable me to be even more helpful and reliable in our conversations!

> What can you do with this new power?

I'm thrilled to explore the possibilities! With my provenance superpowers, I can:

1. **Verify information**: I can trace the origin of data, ensuring it's accurate and trustworthy.

2. **Detect misinformation**: By analyzing the ownership history, I can identify potential biases or manipulations in content.

3. **Provide context**: I can offer a richer understanding of digital assets by sharing their provenance, such as when they were created, who edited them, and more.

4. **Facilitate collaboration**: I can help researchers, journalists, and others collaborate by providing transparent information about the sources of data and materials.

5. **Enhance fact-checking**: By tracing the origin of claims and statements, I can support fact-checking efforts and help reduce the spread of misinformation.

6. **Improve digital preservation**: I can assist in preserving digital artifacts for future generations by accurately documenting their provenance.

These are just a few examples of what I can do with my new power. I'm excited to see how it will enable me to make a positive impact!

This is why we need provenance on all digital content, AI-generated or otherwise, according to Ada herself 🙂 I promise this was the first time I asked the question, and I did not train my local model to say this!

The challenge

To add provenance tracing to Generative AI outputs for later verification, cross-referencing against prompt inputs, and proving the origins of information that leads to meaningful decisions.

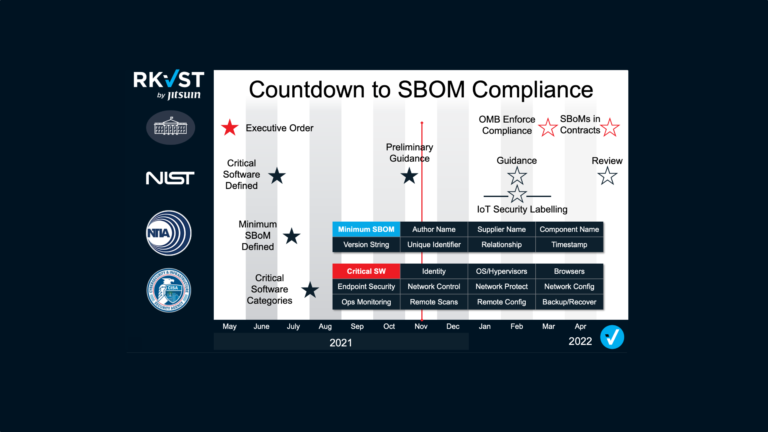

Regulation is coming that demands provenance and transparency for AI systems. The benefits are numerous:

- Hold AI systems accountable for decisions made (especially where they affect life outcomes like health or access to finance)

- Know when an interaction is human or AI

- Ensure ethical use of AI-generated content (art is good, fake news is not)

- Verify the origins of training data (PII in training sets could lead to data breaches, copyrighted works in training sets can lead to IP theft, and specialist uses may require audited or certified specialist data sets)

- And PROVE all the above in the case of a dispute.

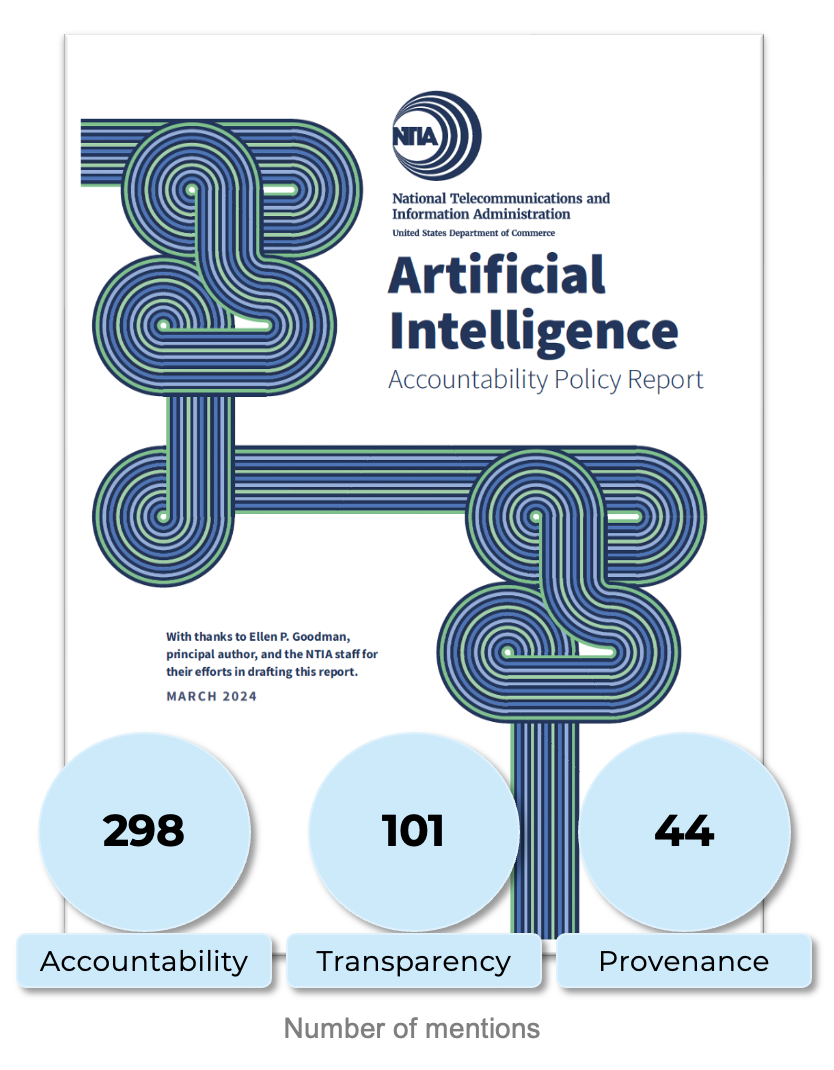

The list goes on and on. The NTIA AI Accountability Policy Report from March of 2024 covers this comprehensively, and includes a section dedicated to authenticated provenance as a key solution area.

Provenance refers to the origin of data or AI system outputs.

For training data, relevant provenance questions might be: Where does the material come from? Is it protected by copyright, trademark, or other intellectual property rights? Is it from an unreliable or biased dataset? For system outputs, provenance questions might be: What system generated this output? Was this information altered by AI or other digital tools?

Authentication is a method of establishing provenance via verifiable assertions about the origins of the content.

The result

A few lines of code to bring privacy-preserved drag-and-drop proof of provenance for chat outputs!

Watch the video:

The method

This is dead simple if you want to follow along and try this for yourself. I’m hacking on an installed Llama release but the same will work if you want to build from source, or indeed add provenance tracing to a different LLM chat agent. There’s nothing Llama-specific here, other than the insertion point.

First you need to get Llama3 downloaded and running on your server. If you’re not sure how to do that, I followed this blog from Samarth Pandey and it works great.

Now you need to find the bit of the LLM that returns responses to the user. To save you doing the hunting I had to do, that’s in /venv/lib/python3.11/site-packages/anyio/_backends/_asyncio.py

Then there are only 4 things you need to do:

- Create a Document Asset in DataTrails to hold the provenance metadata, and stash its Asset ID in an environment variable: DATATRAILS_ASSET_ID

- Create a DataTrails custom integration credential and stash it in environment variables: DATATRAILS_CLIENT_ID and DATATRAILS_CLIENT_SECRET

- Create a function in the Llama3 server to call DataTrails and create provenance metadata

- Add a few lines of Python to the Llama3 server to call that function
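Since the server reads all three variables at run time, it can be handy to check them before starting it. The snippet below is a minimal sketch of such a check. The helper name `missing_datatrails_vars` is made up for illustration and is not part of Llama or DataTrails; only the three variable names come from the steps above.

```python
import os
from typing import Mapping

# Variable names taken from the setup steps; everything else here is illustrative.
REQUIRED_VARS = (
    "DATATRAILS_ASSET_ID",
    "DATATRAILS_CLIENT_ID",
    "DATATRAILS_CLIENT_SECRET",
)


def missing_datatrails_vars(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the required variable names that are unset or empty in env."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_datatrails_vars()
    if missing:
        print("Set these before starting the server:", ", ".join(missing))
    else:
        print("DataTrails environment looks complete.")
```

Running this in the same terminal you will start the server from tells you whether anything is missing, without printing any secret values.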

The DataTrails provenance tracing function

There is nothing special here: we simply create a Document profile Asset and then write provenance events to it each time a message is completed. Recording the hash of the chat message in the provenance event makes it findable with Instaproof without revealing the actual message contents. This could be particularly useful if you’re using Llama for confidential conversations, but is generally useful from a privacy point of view.
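To make the privacy point concrete, here is a small sketch of the hashing idea on its own. It does not call DataTrails or Instaproof; it only shows that the SHA-256 digest of a message can be recorded and later compared against a candidate copy without the record holding the text. The function names are illustrative assumptions.

```python
import hashlib


def message_digest(message: str) -> str:
    """SHA-256 hex digest of the UTF-8 encoded message text."""
    return hashlib.sha256(message.encode("utf-8")).hexdigest()


def matches_record(candidate: str, recorded_digest: str) -> bool:
    """True if the candidate text hashes to the digest recorded earlier."""
    return message_digest(candidate) == recorded_digest


# Only this digest would be recorded, never the message itself.
recorded = message_digest("The capital of France is Paris.")

print(matches_record("The capital of France is Paris.", recorded))  # True: exact copy
print(matches_record("The capital of France is Lyon.", recorded))   # False: altered copy
```

The comparison is byte-for-byte, so even an extra trailing newline in a saved copy produces a different digest and the lookup will not match.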

Add the following function somewhere in class WorkerThread(Thread) in anyio/_backends/_asyncio.py:

(Note this feels like a layering violation but we’re just proving the point here)

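    # Needed at the top of _asyncio.py
    # (Archivist comes from the datatrails-archivist package)
    import hashlib
    from os import environ

    from archivist.archivist import Archivist

    # Add inside class WorkerThread(Thread):
    def _provenance_result(self, result: str, comp_id: str) -> None:
        # Hash the completed message so the event can be matched later
        # without storing the message text itself
        hs = hashlib.sha256(result.encode("utf-8")).hexdigest()
        with Archivist(
            "https://app.datatrails.ai",
            (
                environ.get("DATATRAILS_CLIENT_ID", ""),
                environ.get("DATATRAILS_CLIENT_SECRET", ""),
            ),
            max_time=300,
        ) as arch:
            props = {
                "operation": "Record",
                "behaviour": "RecordEvidence",
            }
            attrs = {
                "arc_description": "Record of generated conversation with Llama3",
                "arc_display_type": "Chat Completion",
                "llama3_completion_id": comp_id,
            }
            # Updating the asset's document hash is what makes the chat findable
            asset_attrs = {
                "document_hash_value": hs,
                "document_hash_alg": "sha256",
            }
            arch.events.create(
                environ.get("DATATRAILS_ASSET_ID", ""),
                props=props,
                attrs=attrs,
                asset_attrs=asset_attrs,
                confirm=False,
            )

Then to make the call, find run(self) and modify it so: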

     def run(self) -> None:
         with claim_worker_thread(AsyncIOBackend, self.loop):
    +        accumulator = ''
             while True:
                 item = self.queue.get()
                 if item is None:
                     # Shutdown command received
                     return
                 context, func, args, future, cancel_scope = item
                 if not future.cancelled():
                     result = None
                     exception: BaseException | None = None
                     threadlocals.current_cancel_scope = cancel_scope
                     try:
                         result = context.run(func, *args)
                     except BaseException as exc:
                         exception = exc
                     finally:
                         del threadlocals.current_cancel_scope
                     if not self.loop.is_closed():
    +                    if isinstance(result, dict) and 'choices' in result:
    +                        if 'content' in result['choices'][0]['delta']:
    +                            accumulator += result['choices'][0]['delta']['content']
    +
    +                        if result['choices'][0]['finish_reason'] == 'stop':
    +                            print('\033[92;1mMessage complete. Sending to DataTrails and clearing accumulator\033[30;0m')
    +
    +                            self._provenance_result(accumulator, result["id"])
    +
    +                            # Finally reset the accumulator ready for the next one
    +                            accumulator = ''
                         self.loop.call_soon_threadsafe(
                             self._report_result, future, result, exception
                         )
                 self.queue.task_done()

That’s it! That’s all it takes to make any chat retrievable and provable on DataTrails with Instaproof! Just remember to set your Asset ID and credentials in your terminal environment before starting the server.

Further work

This is not production code! I’m quite sure there’s a better way to collect the accumulator and more robust error checking is definitely necessary. But the ease with which we could prove this concept is very pleasing 🙂
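As one possible direction for the accumulator, the sketch below pulls the collection logic into a small class that tolerates chunks that are missing the expected keys. It assumes the same chunk shape the patch relies on (`choices[0]['delta']['content']`, `choices[0]['finish_reason']` and a top-level `id`). The class name `CompletionAccumulator` is hypothetical, and this is not how Llama or anyio structure things.

```python
from typing import Any, Optional


class CompletionAccumulator:
    """Collects streamed delta fragments until a chunk reports finish_reason 'stop'."""

    def __init__(self) -> None:
        self._parts: list[str] = []

    def feed(self, chunk: Any) -> Optional[tuple[str, str]]:
        """Return (completion_id, full_text) when a message completes, else None."""
        if not isinstance(chunk, dict):
            return None
        choices = chunk.get("choices")
        if not isinstance(choices, list) or not choices:
            return None
        choice = choices[0]
        if not isinstance(choice, dict):
            return None

        # A chunk may carry no delta or no content (for example a role-only chunk).
        delta = choice.get("delta") or {}
        content = delta.get("content") if isinstance(delta, dict) else None
        if isinstance(content, str):
            self._parts.append(content)

        if choice.get("finish_reason") != "stop":
            return None

        # Message complete: hand back the text and reset for the next one.
        text = "".join(self._parts)
        self._parts.clear()
        return str(chunk.get("id", "")), text


acc = CompletionAccumulator()
stream = [
    {"id": "c1", "choices": [{"delta": {"role": "assistant"}, "finish_reason": None}]},
    {"id": "c1", "choices": [{"delta": {"content": "Hello"}, "finish_reason": None}]},
    {"id": "c1", "choices": [{"delta": {"content": " world"}, "finish_reason": None}]},
    {"id": "c1", "choices": [{"delta": {}, "finish_reason": "stop"}]},
]
results = [acc.feed(chunk) for chunk in stream]
print(results[-1])  # ('c1', 'Hello world')
```

With something like this in place, run() would only need to call feed(result) and pass any returned pair on to _provenance_result, which keeps the key lookups that can fail out of the worker loop.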

It would be great to be able to record the prompts and messages themselves in the DataTrails system. This would have to be optional because of the privacy implications, but in certain use cases, or with DataTrails permissioned sharing, it could be very useful for additional search capabilities.

It would be even greater to intercept graphical generative AI to add content credentials and provenance metadata to generated images at source, a bit like this OpenAI announcement but with added durability. That’ll be coming soon, watch this space! Why would we need this if OpenAI already does content credentials? Well, firstly, Dall-E isn’t the only engine, but more importantly, in their own words:

Metadata like C2PA is not a silver bullet to address issues of provenance. It can easily be removed either accidentally or intentionally. For example, most social media platforms today remove metadata from uploaded images, and actions like taking a screenshot can also remove it. Therefore, an image lacking this metadata may or may not have been generated with ChatGPT or our API.

– OpenAI help center

Typically we don’t recommend immutable provenance of every single thing you do: it’s more about memorialising the meaningful moments. With potentially trillions of chat messages being generated with no particular weight to them, it would be good to play with triggers a little bit so that chats are only captured when it’s important. Perhaps a ‘Provenance This’ button on the client for when a user wants to cover their exposure, or a hint in the server to save a record of interactions on certain topics (like healthcare advice).
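A server-side trigger of that kind could start out as simple as the sketch below. Everything in it is a hypothetical illustration: the topic list, the `should_record` name and the `user_requested` flag (standing in for a ‘Provenance This’ button) are assumptions, and a real deployment would likely want something smarter than keyword matching.

```python
import re
from typing import Iterable

# Hypothetical watch list; a real one would be tuned to the deployment.
SENSITIVE_TOPICS = ("diagnosis", "prescription", "loan", "mortgage")


def should_record(
    message: str,
    user_requested: bool = False,
    topics: Iterable[str] = SENSITIVE_TOPICS,
) -> bool:
    """Decide whether a completed chat message deserves a provenance event."""
    if user_requested:
        # The user explicitly asked for this chat to be recorded.
        return True
    # Whole-word, case-insensitive match against the watch list.
    words = set(re.findall(r"[a-z]+", message.lower()))
    return any(topic in words for topic in topics)


print(should_record("What is a good pasta recipe?"))        # False
print(should_record("Can I change my prescription dose?"))  # True
print(should_record("Just chatting", user_requested=True))  # True
```

In the patched run() loop, the call to _provenance_result would then sit behind a check like this, so only the chats that matter produce events.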

Lastly, we already support tracing of model and dataset provenance like this example to support transparent, accountable and responsible operation. It will be great to complete the circle and link the chat trails to the model and training data that created them. This is not hard to do, so that’ll probably be coming soon too!